To achieve this just rename layer name. You may decide and try not to copy other layers (eg.

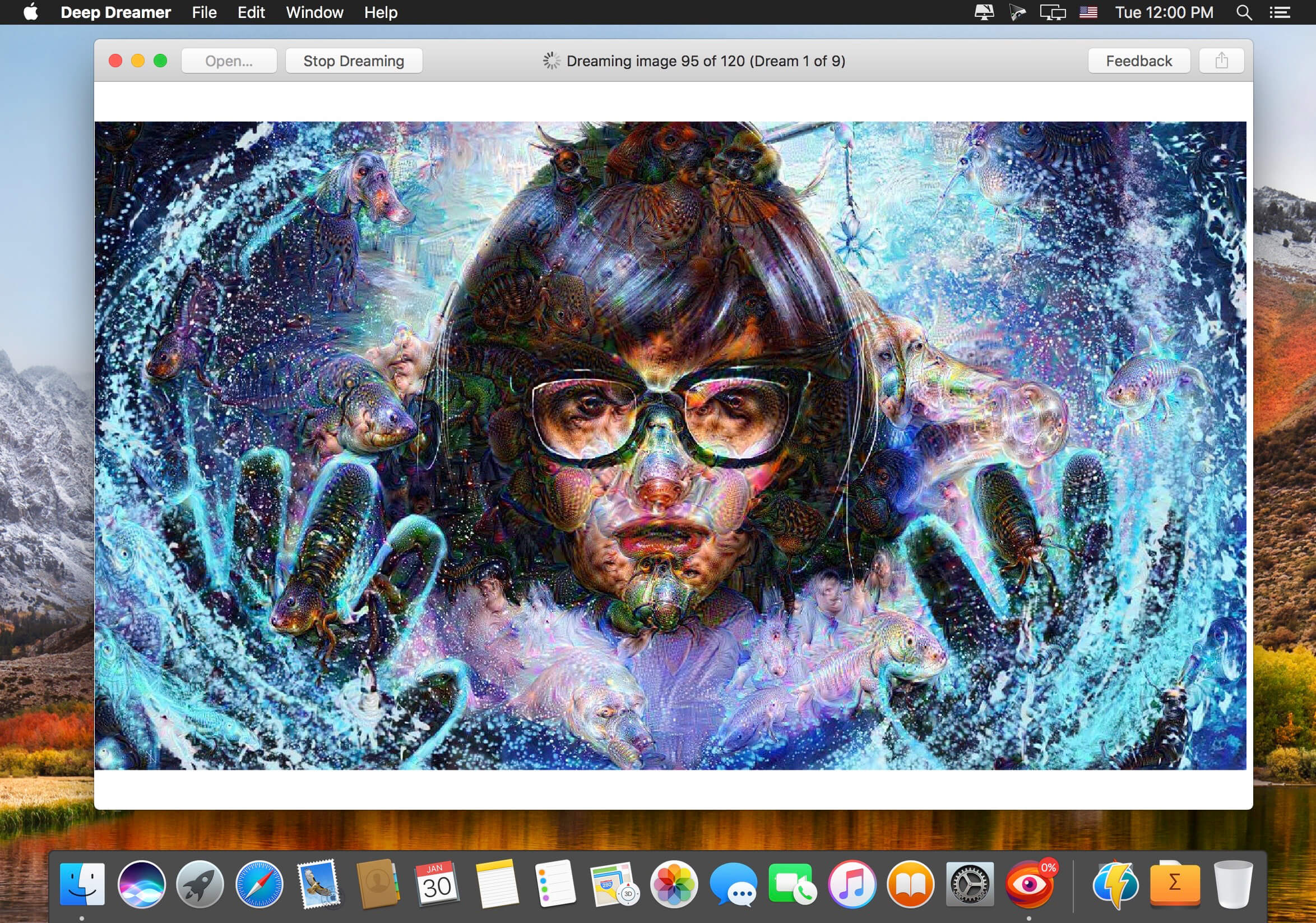

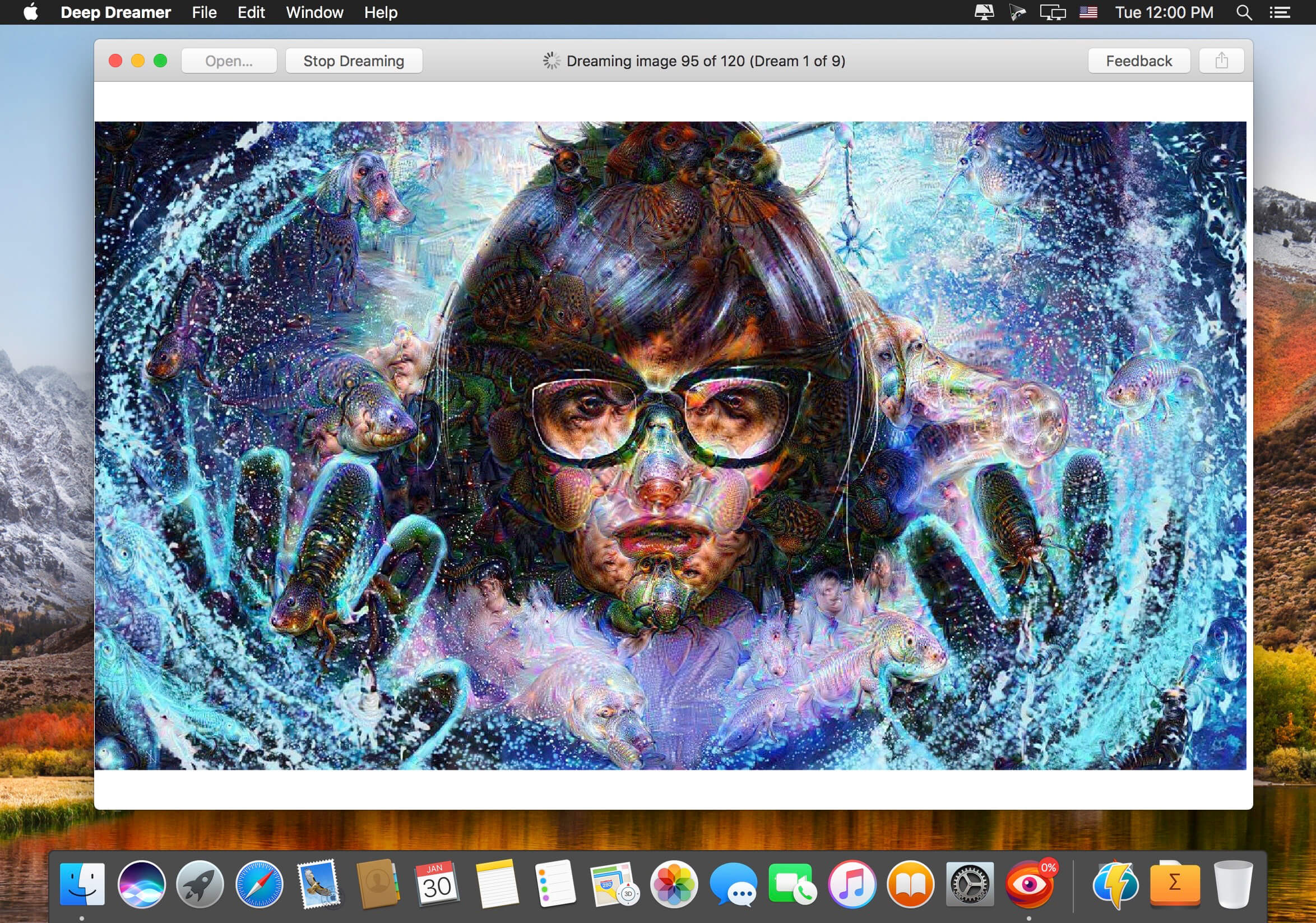

Fine tune method means that you copy almost all information into your net from existing net except the layers called “classification” (three of them). But you have high probability to see something new and no dogs You have little chance to get your image set visible in deepdreams images before 100k of iterations. DeepDickDream guy made 750k iterations to have dicks on Hulk Hogan ( ) I usually stop after 40k of iterations. On CPU calculations were 40 times slower. if none of above strategy works – probably you have troubles and train failed. if you see ‘loss’ stuck at near some value and lowers but very slowly – break and set base_lr 5 times more than current. if you see ‘loss’ value during training is higher and higher – break and set base_lr 5 times less than current. During the training it should be in average lower and lower and go towards 0.0. Strategy for base_lr in solver for first 1000 iterations. To restart training use a snapshot running this command. First results should be visible on inception_5b/output layer. You can break training then and run deepdream on your net. Every 5000 iterations you’ll get snapshot. bvlc_googlenet/bvlc_googlenet.caffemodel Go to the working folder and run this command: #Running deep dreamer on windows download#

Go to caffe/models/bvlc_googlenet and download this file (save it here) Almost ready to train… but you need googlenet yet.It’s important if you want to break training in the middle. snapshot: 5000 – how often create snapshot and network file (here, every 5000 iterations).max_iter: 200000 – how many iterations for training, you can put here even million.You’ll be observing loss value and adapt base_lr regarding results (see strategy below) base_lr: 0.0005 – learning rate, it’s a subject to change.

display: 20 – print statistics every 20 iterations.solver.prototxt, what is important below:.num_output should be set to number of your categories (number of your folders in image folder) You’ll get out of memory error if number is too high, then just lower it. You need probably change it to 20 for 2gb gpu. line 19, define number of images processed at once.If you don’t know them just set all to 129 For blue, green and red channels respectively (mind the reverse order).

lines 13-15 (and 34-36) define mean values for your image set. Next you need to edit all files, let’s start. Copy deploy.prototxt, train_val.prototxt and solver.prototxt into working folder from this link:. Every line of this file should be relative path of the image with the number of the image category. Create text file called train.txt (and put it to the working folder). So you end up with several folders with single image inside. For example ‘images/0/firstimage.jpg’, ‘images/1/secondimage.jpg’, etc… Every folder is a category. For every image you have create separate folder in ‘images’. Create folder named ‘images’ (in your working folder, MYNET). All folders and files you’ll create will be placed in MYNET Create folder /models/MYNET <- this will be your working folder. I use command line tools convert and identify from ImageMagick: convert *.jpg -average res.png identify -verbose res.png to see ‘mean’ for every channel. You need to know what is average value of red, green and blue of your set. OPTION: Calculate average color values of all your images. Save it as truecolor jpgs (not grayscale, even if they are grayscale) Resize all images into dimension of 256×256. Faces, porn, letters, animals, guns, etc. I found that one type of images work well. The hardest part: download 200-1000 images you want to use for training. Building net from the scratch requires time, a lot of time, hundreds of hours… Read it first. Forget about training from the scratch, only fine tune on googlenet.

lines 13-15 (and 34-36) define mean values for your image set. Next you need to edit all files, let’s start. Copy deploy.prototxt, train_val.prototxt and solver.prototxt into working folder from this link:. Every line of this file should be relative path of the image with the number of the image category. Create text file called train.txt (and put it to the working folder). So you end up with several folders with single image inside. For example ‘images/0/firstimage.jpg’, ‘images/1/secondimage.jpg’, etc… Every folder is a category. For every image you have create separate folder in ‘images’. Create folder named ‘images’ (in your working folder, MYNET). All folders and files you’ll create will be placed in MYNET Create folder /models/MYNET <- this will be your working folder. I use command line tools convert and identify from ImageMagick: convert *.jpg -average res.png identify -verbose res.png to see ‘mean’ for every channel. You need to know what is average value of red, green and blue of your set. OPTION: Calculate average color values of all your images. Save it as truecolor jpgs (not grayscale, even if they are grayscale) Resize all images into dimension of 256×256. Faces, porn, letters, animals, guns, etc. I found that one type of images work well. The hardest part: download 200-1000 images you want to use for training. Building net from the scratch requires time, a lot of time, hundreds of hours… Read it first. Forget about training from the scratch, only fine tune on googlenet.

Ok, so, you’re bored, have spare time, have working caffe on gpu and want to try train network to get rid of dogs in deep dream images… Here is tutorial for you. Give me an info if something is unclear or bullshit. Originaly posted on FB Deep Dream // Tutorials group under this link: A little bit messy.
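The train.txt step can be scripted instead of typed by hand. This is only a minimal sketch: it assumes the category folders inside 'images' are named 0, 1, 2, … as in the example paths, and that each line is the relative path, a space, then the category number. The function names `build_train_lines` and `write_train_file` are made up for illustration.

```python
from pathlib import Path


def build_train_lines(working_dir):
    """Return train.txt lines of the form '<relative image path> <category number>'."""
    working_dir = Path(working_dir)
    images_dir = working_dir / "images"
    # Assumption: category folders are named with plain numbers (0, 1, 2, ...).
    category_dirs = [d for d in images_dir.iterdir() if d.is_dir() and d.name.isdigit()]
    lines = []
    # Sort numerically so that folder 10 comes after folder 2.
    for category_dir in sorted(category_dirs, key=lambda d: int(d.name)):
        for image in sorted(category_dir.iterdir()):
            if image.suffix.lower() in (".jpg", ".jpeg"):
                # Path relative to the working folder, with forward slashes.
                rel = image.relative_to(working_dir).as_posix()
                lines.append(f"{rel} {category_dir.name}")
    return lines


def write_train_file(working_dir):
    """Write train.txt into the working folder and return the lines written."""
    lines = build_train_lines(working_dir)
    (Path(working_dir) / "train.txt").write_text("\n".join(lines) + "\n")
    return lines
```

Calling `write_train_file("MYNET")` from the parent of the working folder would write MYNET/train.txt; adjust the path to wherever your working folder lives.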

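The "mind the reverse order" remark about the mean values is easy to get wrong, so here is a small sketch that turns the red, green and blue means reported by `identify -verbose` into three lines in blue, green, red order. It assumes the lines in question use Caffe's `mean_value` field; the helper name `mean_value_lines` is hypothetical.

```python
def mean_value_lines(mean_rgb=None, fallback=129):
    """Return three 'mean_value: N' lines in blue, green, red order."""
    if mean_rgb is None:
        # The tutorial's fallback when the channel means are unknown.
        mean_rgb = (fallback, fallback, fallback)
    red, green, blue = mean_rgb
    # Mind the reverse order: blue first, then green, then red.
    return [f"mean_value: {round(value)}" for value in (blue, green, red)]


if __name__ == "__main__":
    # Example means in (red, green, blue) order, as read off identify's output.
    print("\n".join(mean_value_lines((123.2, 117.0, 104.4))))
```

The printed lines are meant to be pasted over the existing mean lines by hand; the sketch does not edit any prototxt file.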

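The base_lr strategy (5 times less when the loss climbs, 5 times more when it is stuck) can be written down as a small helper. This is a rough sketch, not part of caffe: the function name `suggest_base_lr`, the comparison of the first and second half of the recent loss values, and the 1% "very slowly" threshold are all assumptions made for illustration.

```python
def suggest_base_lr(base_lr, losses, slow_drop=0.01):
    """Suggest a new base_lr from recent loss values (oldest first)."""
    if len(losses) < 4:
        return base_lr  # not enough data to judge a trend
    half = len(losses) // 2
    early = sum(losses[:half]) / half
    late = sum(losses[half:]) / (len(losses) - half)
    if late > early:
        # Loss is getting higher and higher: set base_lr 5 times less.
        return base_lr / 5
    if early - late < slow_drop * early:
        # Loss is stuck, dropping only very slowly: set base_lr 5 times more.
        return base_lr * 5
    # Loss is going down at a healthy pace: keep the current value.
    return base_lr
```

For example, feeding it the loss values printed every 20 iterations over the first 1000 iterations gives a suggestion for whether to break and edit base_lr in solver.prototxt before restarting.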